I was expecting my inaugural T-SQL Tuesday post to not happen this month… Until just yesterday, when I found myself helping explain to someone what an Active/Active SQL cluster was and what it wasn’t. Ah hah! I expected this to be a topic already covered fairly well, and as expected, I found a couple of really good articles/posts by others on this topic. I will reference those in a bit, but I can cover some more general ground first.

The misconception that was I was explaining yesterday morning is the same one that I’ve explained before and have seen others do the same: An Active/Active SQL Server Cluster is not a load-balancing system. It is, in fact, purely for High-Availability purposes. If what you are really after is true load-balancing, unfortunately, you will have to go elsewhere, to something like Oracle RAC.

When I say “true load-balancing”, I mean being able to load-balance a specific set of data across multiple pieces of hardware. What you can do with Active/Active clustering is load-balance a set of databases across multiple pieces of hardware. This is useful if you have a SQL Instance with multiple very heavily-utilized databases—one or more of these DBs could be pulled out to its own instance on separate hardware. This is not done without potentially serious tradeoffs, however, which will be covered in a bit.

The underlying reason for this is that Windows Clustering is only designed for HA purposes. With its “shared nothing” approach to clustering, any given resource within the cluster (say, the storage LUN that holds your accounting software’s MDF file) can only be controlled by one node of the cluster at a time. If the accounting DB’s home LUN is currently attached to the first node in a cluster, it’s going to be pretty hard for the second node to service requests for that database.

OK, what is Active/Active Clustering, then?

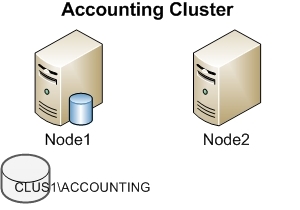

I like to think of it as two Active/Passive clusters that happen to live on the same set of hardware. To illustrate:

This example cluster is a two-node Active/Passive cluster that contains a single SQL Server Instance, CLUS1\ACCOUNTING. This Instance has its own set of drive letters for its DB, Log, and TempDB storage (say, E:, F:, and G:). If both nodes are healthy, Node2 never has anything to do. It sits around twiddling its bits, just waiting for that moment when a CPU melts down in Node1, and it can take over running the ACCOUNTING instance to show how awesome it is.

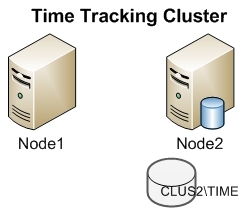

This is another two-node Active/Passive cluster, running a single instance, CLUS2\TIME (you know, because internal time tracking software is that important). Like CLUS1\ACCOUNTING above, it has its own set of drive letters for its storage needs (M:, N:, and O:)In this case, the SQL Server Instance is on Node2, leaving Node1 with nothing to do.

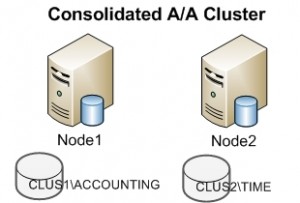

What do we get if we mashed these two clusters together?

We would get this, an “Active/Active” SQL cluster. It’s logically the same as the two previous clusters: it just so happens that instead of the second node doing nothing, it is running another independent instance. Each instance still has its own set of drive letters (E:, F:, and G: plus M:, N:, and O:). Just like in the “Accounting Cluster” above, if Node1 were to fail for some reason, the CLUS1\ACCOUNTING instance would be started up on Node2, this time, alongside CLUS2\TIME.

So, is doing this a good idea?

Maybe. Above, I talked about how a single instance Active/Passive cluster with more than one heavily-used DBs could be re-architected into an Active/Active cluster with some of the heavy DBs on one instance living on one node and the rest of them on a second instance living on the other node in the cluster. This gives you both High Availability and you’ve spread the load out between two servers, right? It does, but what happens if one of the nodes fails and the two instances “clown-car” onto the remaining node? Are there enough CPU & RAM resources on the remaining server to support the combined load?

That illustrates one of the major things to consider when thinking about deploying an Active/Active cluster. Tim Ford (Blog | @sqlagentman) has an article over at MSSQLTips that goes into detail about making this decision.

In addition, Aaron Bertand (Blog | @AaronBertrand) has a sweet little piece of automation to help manage resources when trying to squeeze the maximum amount or size of clowns into a single car without someone’s foot sticking out in the breeze. Of course, it’s something that you hope never actually gets used, but it’s a good way to wring the most performance out of an Active/Active cluster.

Conclusion

I don’t know if this is the best way to explain this concept, but I like it. The Active/Passive cluster concept is usually understood, so I believe it is a nice stepping stone to get to the truth about Active/Active clusters. As with everything, there are tradeoffs and gotchas that need to be considered when designing server architectures like this, and the above-mentioned others have gone a long way to cover those topics.

There we have it, my first T-SQL Tuesday post. Hopefully it is useful and not from too far out of left field 😉

Assuming you used only two nodes as an illustration, what about having a cluster of multiple active nodes with one (or maybe two, depending on the size of the cluster) passive in case of a failure? Seems like that would reduce having pairs of computers running just one instance each, but there is still a backup if need be.

Then again, I know nothing at all of this, so I’m more or less just talking out of my butt.

Yeah, I just used two as an example.

Sure, you can have something like an Active/Active/Passive cluster with 2 or more smaller instances on it. You’re right in that such a situation allows you to really max out things like the RAM settings on the instances, because in the event of a failure, instead of everyone clown-caring onto one node, there’s a whole empty one that things can move to where they (theoretically) will have the same quantity of resources.

Of course, if there’s a hell-bent desire to not have any hardware sitting there doing nothing (you know, because that doesn’t make you any money, ahem), then you’re going to have to set stuff up one way or another to deal without an empty machine.

And, thanks for single-handedly giving me comment traffic, heh 😀

Heh, yeah, the whole corporate ideal of “I don’t want to waste any money now, so let’s waste it later.”

Hee hee, no prob! It’ll pick up; just might take a bit. (I’ve only ever had a handful of people comment on my blog, so I hear ya.)

IF I were to setup a active/active/passive. lets say 3 nodes, 2 cluster instances, one box sitting , idling, waiting , etc… How would the aliasing work for the failed node in the cluster, to make sure that the node would go to the idle machine and not on the other active node? If this is an obvious question let me know, as I am fairly new to sql server clustering.

Hi Bruce,

Clustering doesn’t have any automatic, built-in load-balancing like that.

However, what you can do, is configure the Preferred Owners on each of the clustered instances. You can get to this setting by going to the “Services and applications” screen of the cluster manager for the cluster in question, then going to Properties for the SQL instances shown. It’s there on the General Tab.

So, what you would do, is set the order of servers you’d want the instance to fail over to. In your example, for both of the instances, you’d put their “home” node first in the list, then the “idle” node second. The other “active” node of the cluster would be last.

What then happens in the event of a failover, is the instance should fail to the next available node on that list. Since the “idle” node is next, the instance would fail to that one.

Now, of course, if you had multiple failures for whatever reason, both instances would clown car onto that originally idle node, but, if you’ve had multiple failures like that, that might be the only machine still alive, so they would have both wound up there, anyway 😉

Clear as mud? 😉

Windows Failover Clustering is shared-disk, not shared-nothing. I know what you mean, though. And thanks for the post, I’ve heard several people talking active-active as if it were a way of load balancing the same data across multiple servers like MySQL Cluster or Oracle RAC.

Never mind. : ( You’re right, it is a shared nothing model, albeit with the disk as a single point of failure.

No worries 🙂 Yeah, plain ol’ Windows Failover Clustering is almost like the worst of all worlds–no load-balancing between machines/instances and there’s still a single point of failure (more or less).

This is where AlwaysOn Availability Groups comes in, though. There’s still an “old-school” cluster involved, but one does get some semblance of load-balancing, as long as the load you want to balance is a read-only load like reporting or ETL Source.

(Aside: “Active/Active” is such a terrible phrase, and this confusion is why. But, I admit, it rolls off the tongue so much easier than “Multi-Instance Two (or three, four, whatever) Cluster”!)

Thanks for your comments, James!