Procedures and Functions. Well, this could be interesting. Everyone else’s posts, that is.

OK, T-SQL Tuesday Twenty-Four: Two-year anniversary edition! Pretty sweet of Adam Machanic (blog | @AdamMachanic) to come up with this whole thing two years ago. A good guy, he is. This month’s topic is Prox ‘n’ Funx, brought to all of us by Brad Schulz (blog). I kind of feel bad here—I don’t really know much about Brad, but he’s an MVP, and flipping around his blog brings up some pretty cool posts. I really like this one, for example.

I actually have what I think is a decent little thing to talk about for this topic. It could have been a stretch topic for T-SQL Tuesday #11 about misconceptions, even if [I hope] not exactly a widespread one. This topic revolves around transaction control and error handling within stored procedures, which can be a crazy topic in and of itself. Books could be written on the topic. Specifically, the conversation that I found myself involved in one day was about what happens to any open explicit transactions when a procedure runs into an error.

Let’s Talk About Procs Dying

Once upon a time, someone said to me that if an error occurs within a procedure which contains an explicit transaction, that transaction will remain open, potentially blocking other sessions on the system. In short, that’s not true, and it’s fairly easy to prove. We’ll work through a quick little script to do this, and will include modern TRY…CATCH error handling, because that will come in when we get to the main point (I say “modern” there like it’s a new thing, but coming from a guy who has worked with SQL 2000 more than anything else, that distinction feels necessary). It actually doesn’t matter if there is any error handling during the first part of this exercise, as the results will be the same. This is a pretty contrived setup, but that’s pretty much what I’m going for.

First, create a table & put a couple of rows into it. The two columns we’re most worried about are “FakeNumberColumn” and “ActualNumberColumn” (note their data types), so named because the error which will be triggered in a little bit will be a type conversion error.

    -- Create Table
    CREATE TABLE dbo.TransactionTest2008
    ( TransactionTestID  INT NOT NULL IDENTITY(1,1),
      RowDescription     VARCHAR(50) NOT NULL,
      FakeNumberColumn   VARCHAR(10) NOT NULL,
      ActualNumberColumn INT NULL
    )
    ;

    -- Populate it with a couple of rows
    INSERT INTO dbo.TransactionTest2008 (RowDescription, FakeNumberColumn)
    SELECT 'Actually a Number 1', 10
    UNION ALL
    SELECT 'Actually a Number 2', 100
    ;

Now for the really contrived part: a Stored Procedure that will throw an error if FakeNumberColumn contains something that won’t implicitly convert to a numeric:

    CREATE PROCEDURE TransactionTester2008
    AS
    BEGIN TRY
        BEGIN TRAN

        UPDATE dbo.TransactionTest2008
        SET ActualNumberColumn = FakeNumberColumn * 2

        -- select *
        -- from dbo.TransactionTest2008

        -- Wait for 10 seconds so we have a chance to look at DBCC OPENTRAN (unless of course it dies first)
        WAITFOR DELAY '00:00:10'

        COMMIT TRAN
    END TRY
    BEGIN CATCH
        -- Some kind of error has occurred
        PRINT 'Welcome to Catchville'

        IF @@TRANCOUNT > 0
            ROLLBACK

        -- Raise an error with the details of the exception
        DECLARE @ErrMsg NVARCHAR(4000), @ErrSeverity INT

        SELECT @ErrMsg = ERROR_MESSAGE(),
               @ErrSeverity = ERROR_SEVERITY()

        RAISERROR(@ErrMsg, @ErrSeverity, 1)
    END CATCH

(The commented-out SELECT statement can be un-commented if you like, to see the state of the table at that point of execution.)

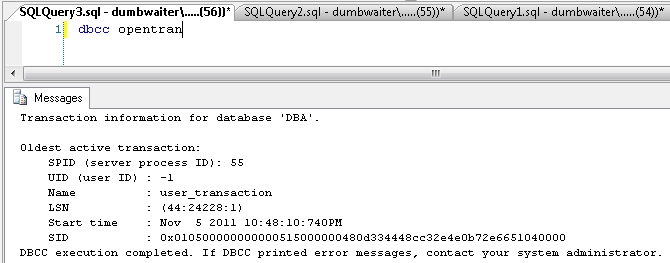

As it is now, the proc will run successfully. The 10-second WAITFOR in it gives you time to run DBCC OPENTRAN in another query window and see the proc’s open transaction.

EXEC TransactionTester2008

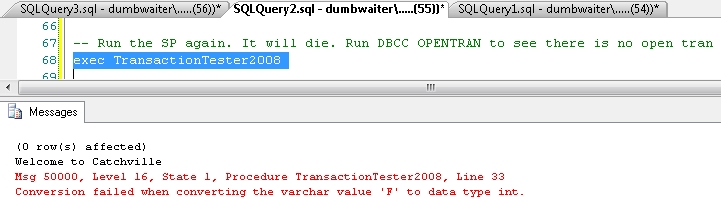
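For anyone following along, the check from the second query window might look something like the sketch below. The DMV query is my own addition rather than part of the original walkthrough, and it assumes the login has VIEW SERVER STATE permission; the aliases are just illustrative.

```sql
-- Run in a second query window while the procedure is inside its WAITFOR.
-- Reports the oldest active transaction in the current database, if there is one.
DBCC OPENTRAN;

-- Alternative view (assumes VIEW SERVER STATE permission):
-- every session that currently has a transaction open.
SELECT tst.session_id,
       tst.transaction_id,
       tat.name AS transaction_name,
       tat.transaction_begin_time
FROM sys.dm_tran_session_transactions AS tst
JOIN sys.dm_tran_active_transactions AS tat
    ON tat.transaction_id = tst.transaction_id;
```

DBCC OPENTRAN only looks at the current database, so run it in the same database that holds dbo.TransactionTest2008.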

Now we’ll make things somewhat interesting. Insert another row into our table to put a letter into FakeNumberColumn, then run the proc again.

    INSERT INTO dbo.TransactionTest2008 (RowDescription, FakeNumberColumn)
    SELECT 'Not really a Number', 'F'

    EXEC TransactionTester2008

Things won’t go so well this time…

We get the PRINT message about being in Catchville, so we know that our exception was caught and execution finished in the CATCH block. At this point, go run DBCC OPENTRAN again, and you will see that there isn’t a transaction open. This would be the expected behavior. No transactions are left open; the in-process activities are rolled back.

I should also note that a less-severe error, such as a constraint violation on an INSERT, only fails that particular statement. Outside of a TRY block (and with XACT_ABORT at its default of OFF), the engine skips the failed statement and continues processing normally. That behavior has led to some near-brown-pants moments while running a huge pile of INSERTs to load some provided business data, but that’s what explicit transactions are for!
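Here is a rough sketch of that statement-level behavior. The temp table #ConstraintDemo and its CHECK constraint are made up for illustration and have nothing to do with the test table above; the sketch also assumes XACT_ABORT is OFF for the session (the Management Studio default) and that nothing is wrapped in TRY…CATCH.

```sql
-- Hypothetical scratch table, not part of the walkthrough above.
CREATE TABLE #ConstraintDemo
( DemoValue INT NOT NULL CHECK (DemoValue > 0) );

BEGIN TRAN;

INSERT INTO #ConstraintDemo (DemoValue) VALUES (1);   -- succeeds
INSERT INTO #ConstraintDemo (DemoValue) VALUES (-1);  -- fails the CHECK constraint; only this statement dies
INSERT INTO #ConstraintDemo (DemoValue) VALUES (2);   -- still runs

-- The transaction is still open and holds the two good rows.
SELECT @@TRANCOUNT AS OpenTransactions,
       COUNT(*)    AS RowsInserted
FROM #ConstraintDemo;

-- Because the work sits inside an explicit transaction, the partial load can be thrown away.
ROLLBACK TRAN;

DROP TABLE #ConstraintDemo;
```

The point of the explicit transaction is that last step: when one INSERT out of a big pile fails, you get to decide whether the rest should stick.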

Now, About Timeouts…

OK, that section wound up pretty long. Here’s where I’m actually getting to what I want to talk about…

We’ve established that errors in Stored Procedures will not lead to transactions being left open under normal circumstances. There is a situation where things don’t go so well: when a client/application query times out for one reason or another. If this happens, the client tells SQL Server to stop (an attention signal), the in-progress batch halts where it is, and nothing else really happens: the rest of the procedure doesn’t run, and neither does the CATCH block. As long as the connection itself stays open (sitting in a connection pool, for example), this can leave open transactions, which, of course, are bad, bad, bad.

Starting with where we left off above, we can simulate an application timeout by cancelling the running SP in Management Studio.

First, delete the error-producing row from dbo.TransactionTest2008:

    DELETE FROM dbo.TransactionTest2008
    WHERE RowDescription = 'Not really a Number'

Execute TransactionTester2008 again, and this time, while in the 10-second WAITFOR, cancel the query in Management Studio. Even with the TRY…CATCH block in place, the explicit transaction is left open (check with DBCC OPENTRAN). What this means is that whatever application (or DBA!) is running the statement(s) is responsible for closing an open transaction if a session times out or is cancelled. In my experience, people who are in the habit of wrapping everything they do in explicit BEGIN/COMMIT/ROLLBACK TRAN are less likely to cancel a script they’re running and then sit there blocking half of the rest of the world. Not that I’ve been there, or anything…
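Cleaning up by hand after a cancel like that can be as simple as the following sketch, run in the same query window (session) that was cancelled. The PRINT message is just my own wording.

```sql
-- Run in the session whose query was cancelled.
-- @@TRANCOUNT is per-session, so this only sees that session's transactions.
IF @@TRANCOUNT > 0
BEGIN
    PRINT 'Open transaction found; rolling back.';
    ROLLBACK TRAN;
END;
```

An application that handles its own timeouts would need to do the equivalent before reusing the connection.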

There is a safety net here: XACT_ABORT. I first learned about XACT_ABORT while doing some work with Linked Servers a few years ago. What XACT_ABORT does when set to ON is force SQL Server to terminate the batch and roll back the entire transaction if a run-time error occurs. Here’s the Books Online page for XACT_ABORT.
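If you are not sure what a given session currently has XACT_ABORT set to, one way to check is the @@OPTIONS bitmask, where 16384 is the XACT_ABORT bit. This is a small sketch of my own rather than anything from the test setup above; the column alias is arbitrary.

```sql
-- 16384 is the XACT_ABORT bit in the @@OPTIONS bitmask.
SELECT CASE WHEN (@@OPTIONS & 16384) = 16384
            THEN 'ON'
            ELSE 'OFF'
       END AS XactAbortSetting;

-- Turn it on for this session and check again.
SET XACT_ABORT ON;

SELECT CASE WHEN (@@OPTIONS & 16384) = 16384
            THEN 'ON'
            ELSE 'OFF'
       END AS XactAbortSetting;

-- Put it back to the default so the rest of the session behaves as before.
SET XACT_ABORT OFF;
```

A SET inside a stored procedure only lasts for that procedure's execution, so setting it in the proc does not change the caller's session.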

In our case, flipping that setting to ON within our test SP will change what happens when a query timeout (or cancel) happens. Add “SET XACT_ABORT ON” at the beginning of the above SP and re-create it thusly (either drop and recreate or add the line and change it to ALTER PROCEDURE):

    CREATE PROCEDURE TransactionTester2008
    AS
    SET XACT_ABORT ON
    […]

Run the SP as before, and again, while in the 10-second WAITFOR, cancel the query. Check for an open transaction now and there won’t be one: it was rolled back by the engine when the timeout (cancel) occurred, because of XACT_ABORT. No locks are still being held, and no other sessions will be blocked by the timed-out session. Smooth sailing 🙂

As Usual, Be Careful

Before hauling off and adding this to a bunch of SPs, beware the Law of Unintended Consequences. Test extensively, because no matter how self-contained a fix may seem, who knows what else may be affected. Nowhere is this as true as it is in old, overly-complicated, poorly-understood legacy systems. I know DBAs like to fix problems and prevent problems from happening in the first place, but please make sure that no new issues are introduced while trying to “fix” things.

Oh; don’t forget to clean up after yourself!

    DROP TABLE dbo.TransactionTest2008

    DROP PROCEDURE TransactionTester2008