If you’re not already familiar with this function, I may be about to become your hero. Or, you’ll keep using the Import/Export Wizard, some T-SQL, or straight-up SSIS to do this sort of thing and this post won’t matter. Either way, this was new to me a while back and its simplicity and usefulness (at least in our environment) blew my mind at first.

The CONCATENATE function in Excel itself is pretty straightforward; it’s a one-trick pony that does exactly what you think it does: concatenates strings together. In fact, Excel’s description of the function isn’t that much more complicated: “Joins several text strings into one text string.”

Couple notes about CONCATENATE…

The first one has to do with its behavior. CONCATENATE will combine anything in Excel cells, even values that Excel treats as numbers and would happily use in arithmetic functions. In other words, if you have “12” in one cell and “78” in another, using CONCATENATE on those two cells gives you “1278,” not 90.

The second point is about its source data. Obviously this can be used to concatenate cell contents together into another cell, but strings can be put directly in the function definition, too. This means text can be inserted into the result directly via the function call—it doesn’t need to already be in the Sheet somewhere.
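If you think more easily in code than in spreadsheet formulas, both points can be mimicked in a few lines of Python. This is only a rough sketch of the behavior, not how Excel implements it, and the `concatenate` helper is a name I made up for illustration.

```python
def concatenate(*parts):
    """Rough stand-in for Excel's CONCATENATE: turn every argument into text and join."""
    return "".join(str(p) for p in parts)


# Numeric "cell" values are treated as text and glued together, not added.
joined_numbers = concatenate(12, 78)

# Literal strings can be mixed in with "cell" values in the same call.
labelled = concatenate("Rate for ID ", 274, " is ", 0.021)

print(joined_numbers)
print(labelled)
```

The first call produces the text 1278, matching the Excel behavior described above.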

What’s it good for?

The primary use case for this function in my life is when I’m on a project that involves either adding new or updating existing data based on some Excel sheet of Business data that gets handed my way. I’ll use this to build UPDATEs or an INSERT statement to get this data into the DB quickly and easily.

I’m going to build a quick example using the Sales.SalesPerson table in AdventureWorks.

For the sake of argument, let’s say that everyone is getting their commission rate adjusted and it’s going to be easier to do it directly in the table instead of through the UI (this would be more plausible if the company were larger and had more than 17 rows in this table, but that’s my story and I’m sticking to it). Or, maybe there isn’t a UI, because “when or why would we ever need to change the commission rates? Don’t waste time on that interface.” But that never happens.

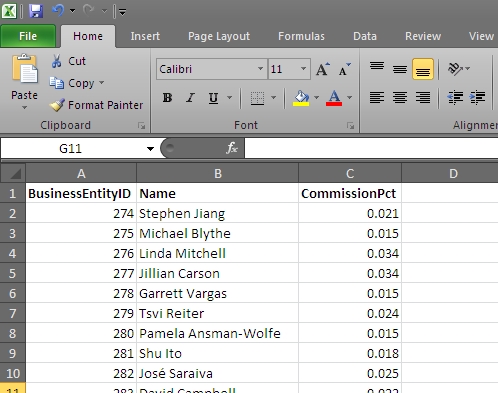

Anyway, the VP of Sales sends you the following Workbook that contains new commission percentages for all of the sales folk:

I’m making this somewhat easy by including the BusinessEntityID column, which is the primary key for this table. Having the key in the sheet means each spreadsheet row maps straight to one row in the table, and it’s usually possible to get the business to include it as long as you know this activity is coming and can talk to them ahead of time.

When building statements with CONCATENATE, I usually start out in SSMS and manually type out what the first statement would be:

UPDATE sales.SalesPerson

SET CommissionPct = 0.021

WHERE BusinessEntityID = 274

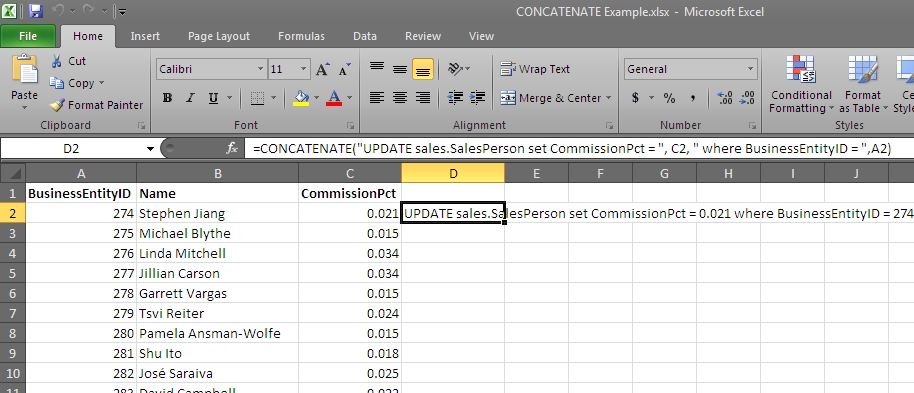

Most of the time, I type out the Excel function call manually based on this initial statement, but it can be copied in as well, after stripping off some of its formatting (line breaks & tabs). The values for the SET & WHERE clauses are replaced by the cells that contain those particular pieces of data. Due to the way the CONCATENATE function works, the “pieces” to concatenate are separated by commas. Wrap plain text to include in the final result in double quotes. The end result should look something like this:

CONCATENATE("UPDATE sales.SalesPerson set CommissionPct = ", C2, " where BusinessEntityID = ",A2)

Put an equals sign at the beginning & paste that into Excel. Some magic happens and, bam, UPDATE statement:

Copy that out and paste it in SSMS underneath the one written earlier & compare the two. If they match, the formula is good. The rest is simply a matter of filling the series down. All of the necessary UPDATE statements are done! Don’t skip checking those over to make sure nothing crazy is going on.
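To make the fill-down step concrete, here is a small Python sketch of what Excel is effectively doing: applying the same template to every row. It is an illustration only, not a replacement for the spreadsheet approach. The `build_update` helper is a made-up name, the first row is the one from the example above, and the second row is invented purely so there is something to fill down to.

```python
# Each tuple stands in for one worksheet row: (BusinessEntityID, new CommissionPct).
rows = [
    (274, 0.021),  # the row used in the hand-typed statement above
    (275, 0.015),  # hypothetical row, made up for illustration
]


def build_update(business_entity_id, commission_pct):
    """Mirror the CONCATENATE formula: fixed text with the two cell values spliced in."""
    return (
        "UPDATE sales.SalesPerson set CommissionPct = "
        + str(commission_pct)
        + " where BusinessEntityID = "
        + str(business_entity_id)
    )


# "Filling down" is just applying the same formula to every row.
statements = [build_update(bid, pct) for bid, pct in rows]

for statement in statements:
    print(statement)
```

The same rule applies as with the Excel version: read the generated statements before running them.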

The same thing can be done with INSERT statements if a big block of new data is going into a table. Even if you prefer to do a single INSERT statement instead of discrete ones, CONCATENATE is still useful to build the SELECTs and UNION or UNION ALLs that feed into a single INSERT (just remember to delete the trailing UNION ALL from the last row after filling down):

CONCATENATE("SELECT '", B3, "', getdate(), ", A3, " UNION ALL")
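Two things are easy to trip over with this pattern: the last row ends up with a dangling UNION ALL, and any text value that contains a single quote will break the generated SQL unless the quote is doubled. The Python sketch below shows one way to handle both, assuming (as in the formula) a text value in column B and a numeric value in column A. The `build_select` helper and the sample rows are made up for illustration, and the INSERT line itself is left out because the target table depends on your data.

```python
def build_select(text_value, numeric_value):
    """Mirror the formula's output for one row, minus the trailing UNION ALL."""
    # Double any single quotes so the value is a valid T-SQL string literal.
    safe_text = str(text_value).replace("'", "''")
    return "SELECT '" + safe_text + "', getdate(), " + str(numeric_value)


# Hypothetical rows: (column B text, column A number).
rows = [
    ("O'Brien", 1),
    ("Smith", 2),
]

# Joining with UNION ALL between rows avoids the dangling one after the last row.
select_body = "\nUNION ALL\n".join(build_select(text, num) for text, num in rows)

print(select_body)
```

In Excel itself, the quote doubling can be done by wrapping the text cell in SUBSTITUTE before it goes into CONCATENATE.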

Useful?

I think it is. Even if you usually use SSIS to do this type of work, I bet there are still times when using Excel to cook up some UPDATE statements in a hurry is both quicker and easier than writing out a package to do it, or maybe even than the Import wizard.

I use this on a regular basis and believe it’s one of the more useful non-BI or Expense Report things you can do with Excel 😉