We all know the best practices for SQL Server service accounts: use a domain account (if you’re using Active Directory), don’t make it a local admin, use a different one for each service (and server/instance), and so on. These are, of course, good practices, and they should be followed as closely as possible in Production and on servers/instances that house Production data.

A problem arises, however, if you have more than a handful of servers or run some of the BI components. The number of service accounts involved in your SQL Server plant could be very large, and managing those accounts takes an incredible amount of overhead. This goes beyond simply creating and assigning them; chances are good that there are policies in place that require changing passwords. User accounts, service accounts, and other automation accounts likely all fall under this umbrella. If you’re lucky, maybe non-user accounts have a longer change interval, but it’s still something that is going to need to be done on a regular basis. In large environments, this could take an excruciatingly awful amount of time to do.
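To put a rough number on that overhead, here is a back-of-the-envelope sketch in Python. Every figure in it (server count, services per server, rotation interval, minutes per change) is a made-up assumption for illustration, not a measurement from any real environment, so plug in your own.

```python
# Rough estimate of manual service-account password work per year.
# All inputs are hypothetical; substitute numbers from your own environment.

def yearly_password_changes(servers, services_per_server, rotations_per_year):
    """Total manual password changes per year, one account per service."""
    return servers * services_per_server * rotations_per_year


def hours_per_year(changes, minutes_per_change):
    """Convert a count of changes into hours of hands-on work."""
    return changes * minutes_per_change / 60


if __name__ == "__main__":
    servers = 40              # assumed number of SQL Server hosts
    services_per_server = 3   # assumed: engine, Agent, one BI component
    rotations_per_year = 4    # assumed 90-day password policy
    minutes_per_change = 15   # assumed: change, update service, restart, verify

    changes = yearly_password_changes(servers, services_per_server, rotations_per_year)
    print(changes)                                      # 480
    print(hours_per_year(changes, minutes_per_change))  # 120.0
```

With those assumed inputs, that is 480 manual changes and about 120 hours a year, before counting any mistakes.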

All of this is not to mention the human factor involved here. One of the recurring themes in a couple of my presentations is making an effort to automate as many things as possible to remove the human from the process. Not that we’re bad, but there are some things, especially tedious and repetitious tasks, where dumb things go wrong simply because of the nature of the work. Changing a bunch of service account passwords is definitely one of them. There used to be two types of sysadmins: those who have changed a service account’s password but forgotten to update and restart the service itself, and those who will.

Enter Group Managed Service Accounts

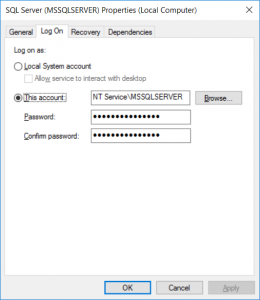

Group Managed Service Accounts (gMSAs) are a way to avoid most of the above work. They are special accounts that are created in Active Directory and can then be assigned as service accounts. They are completely managed by Active Directory, including their passwords. This means no more manual work to meet the password-changing policy; the machine takes care of that for you.

There are other security-related controls that can be gained with them, but this is the major feature.

I’ll also note that you, the DBA, are likely to need some help from your AD admin to get these set up. They’re going to need to actually create the accounts for you in the system, and there may be some changes needed to their AD configuration in order to support them. They’ll also need to have at least one domain controller running Windows Server 2012 or later in their domain, but I’d hope today that’s not going to be a hurdle to overcome.
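To make that conversation with your AD admin a little more concrete, here is a minimal Python sketch that prints the PowerShell commands typically involved in a basic gMSA setup. The cmdlets (`Add-KdsRootKey`, `New-ADServiceAccount`, `Install-ADServiceAccount`, `Test-ADServiceAccount`) come from Microsoft’s PowerShell modules, but the account, host, group, and domain names are hypothetical placeholders, and your environment may well need different parameters. Treat it as a checklist to discuss, not a script to run blindly.

```python
# Sketch: render the PowerShell steps for a basic gMSA setup.
# The names used in the example at the bottom are hypothetical placeholders.

def gmsa_setup_commands(account, dns_host_name, host_group):
    """Return the PowerShell commands, in order, for a basic gMSA setup."""
    return [
        # AD admin, once per forest: create the KDS root key that gMSA
        # passwords are derived from. Despite the switch name, the key can
        # take hours to become usable while it replicates.
        "Add-KdsRootKey -EffectiveImmediately",
        # AD admin: create the account and name the group of computers
        # allowed to retrieve its managed password.
        f"New-ADServiceAccount -Name {account} -DNSHostName {dns_host_name} "
        f"-PrincipalsAllowedToRetrieveManagedPassword {host_group}",
        # On each SQL Server host: install the account, then verify it.
        f"Install-ADServiceAccount -Identity {account}",
        f"Test-ADServiceAccount -Identity {account}",
    ]


def service_logon_name(domain, account):
    """gMSA logon names end in '$'; the password box is left blank."""
    return f"{domain}\\{account}$"


if __name__ == "__main__":
    for command in gmsa_setup_commands(
        "gmsaSql01", "gmsaSql01.contoso.example", "SqlServerHosts"
    ):
        print(command)
    print(service_logon_name("CONTOSO", "gmsaSql01"))
```

The last line shows the form of the name you would enter when assigning the account to a service: the domain, the account name, and a trailing `$`, with no password.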

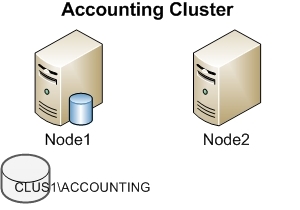

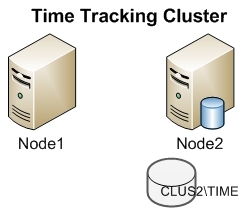

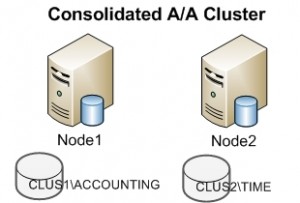

Since I’m mostly here to talk about SQL Server, I’ll note a couple of different support situations. gMSAs are supported starting with SQL Server 2014 running on Windows Server 2012 R2 or later, for everything you can do with SQL Server: standalone instances, Failover Clusters, and Availability Groups. Just plain Managed Service Accounts (MSAs) can go back a little further, but they only support standalone instances of SQL Server.

From a non-SQL Server perspective, one of the major disadvantages of gMSAs is that one can’t just use them everywhere. Services have to be specifically designed to support the use of these accounts, and that’s not going to be the case everywhere.

Since this isn’t exactly a new feature, there are plenty of documentation and blog posts out there covering it and the various requirements to implement it. There’s a great overview and setup blog post on MSDN here: https://blogs.msdn.microsoft.com/markweberblog/2016/05/25/group-managed-service-accounts-gmsa-and-sql-server-2016/

That post links to this old TechNet article, which is still a pretty good resource for understanding what these things are, with a little more detail on what is going on in the back-end: https://technet.microsoft.com/en-us/library/hh831782.aspx

Finally, my coworker Joey has a slightly older writeup here, https://joeydantoni.com/2012/12/14/group-managed-service-accounts/, that walks through the process of setting this up. Note that some of the requirements have changed since that was written, but the general process remains the same.

gMSAs are a nice feature that isn’t too onerous to set up, but goes a long way toward making your life easier and your data far more secure.