Hello T-SQL Tuesday Readers! I’m sorry for being really late in getting this post out this week.

So! A couple of weeks ago, for this month’s topic, I asked everyone to post about something that broke or went wrong, and what it took to fix it. Last week, fourteen of you responded with your stories of woe so we could all learn from your incidents and recoveries in a constructive way, like pilots do. Here’s the recap of those posts, in the order that they came in.

What Everyone Had to Say

First, is Rob Farley with, “That time the warehouse figures didn’t match“… heh, I feel like I’ve heard this one before. But it turns out, no! this is new and awesome. Rob talks about a fundamental rule he has when loading data into a data warehouse: “protect the base table.” This is his first step in ensuring that data in the DW is correct, and as anyone who does DW or BI work knows–that is always the most important thing, because if trust in the data coming out of the reporting system is lost, it can be pretty hard to get it back.

John McCormack has “Optimising a slow stored procedure” next. John walks through his process of tuning up a stored procedure he had gotten an after-hours call about being slow enough that things were breaking. He’s got a good tip in here if you use SentryOne’s Plan Explorer, too. AND, there’s an added bonus of including something that I find frustrating when it happens. Basically: “This web page is usually really slow and the users were frustrated about it, but nobody ever told me!” Y’all! Tell us (IT, support, whoever) when you’re not happy, we’re usually happy to fix things to make your life easier!

Richard Swinbank talks about that time he unintentionally set a trap for himself in “Default fault.” Changing the default database for your login in SQL Server when you’re the DBA is all fine until you decommission that database! Richard includes great steps for digging yourself out of this hole with sqlcmd if you’ve “locked yourself out” of the instance when using SSMS.

Eitan Blumin’s post about a mis-behaving set of Azure VMs has a good surprise twist in it. This post struck me as a little hilarious, both because of the 32 hours bit, but also because I/we had just been talking about fullscan statistics update in our internal corpchat this week. I like fullscan stats in general, when they can be pulled off and are helpful (think non-uniform data distribution), but when they take down your AG, that’s, uh, bad. Don’t do that. Eitan also includes a nice set of takeaways at the end of the post.

SQL Cyclist/Kevin3NF I think wins the prize this month for having the longest contribution. Turns out, he has an ongoing series of blog posts of real-life stories along these lines, which include seven posts about fun goings-on that fit into this topic.

Next is Jason Brimhall with “Disappearing Data Files“… Jason shares a pet peeve of mine, which is having to fix the same thing over and over again. When that happens, it’s good that one at least has/knows the fix, I guess…. Anyway, Jason goes into using Extended Events as an audit tool to detect changes to tempdb files to help track down the root cause for a “recurring fix”, and reiterates that sometimes those root causes are hard to track down, and the best place to put yourself in for those situations is to be ready the next time.

Deborah Melkin says, “I feel like it’s been a while since I’ve joined the party.” Ohh, I’m pretty sure it’s not as long as it has been for me… Deborah doesn’t have a specific break/fix story, but talks about how experiences and dealing with problems over time makes it easier to address new problems as they come up. This is the core lesson (lesson? Process?) I’m wanting everyone to get this month, so I appreciate this perspective!

Hugo Kornelis has my favorite post of the month. If you’re the type who doesn’t read all of the T-SQL Tuesday posts and waits for the recap to find the one or two that look the most interesting, make sure this one is on your list. It’s short and to the point, and contains a fantastic lesson. Two, really, although one isn’t explicitly mentioned as a lesson. This one is the fact that if there’s something you do on a regular or reoccurring basis, you should have that process written down. Think of it like a checklist (flying reference ahoy!). But what Hugo makes clear is that even if you’re doing something different that you don’t have a process for, but is adjacent to that process, it’s still a good idea to reference that process. Just read Hugo’s post, it’s easier than listening to me 🙂

I felt bad just reading Aaron Bertrand’s post. Just, go read it. Promise. Take his advice.

Lisa Bohm brings us what I think is a heartwarming story about what transparency and honesty can bring to even professional relationships. Lisa tells us about how this worked out for her while working for an ISV when things went bad on a Friday night (it’s always a Friday night). In addition to the honesty part, she had another takeaway that we can all do better at sometimes: Shut up and start listening.

Next up is STEVE JONES, the one that got me into this damn mess in the first place. He at least takes ownership of that in the post, heh. Steve’s speaking my language here with “And too few companies share their learnings publicly.” …This is a crab of mine, as well, and I think a lot of IT shops would make fewer bone-headed mistakes if everyone was more willing to share what they’ve learned, NTSB-style. I understand why things are the way they are, and all, but it doesn’t mean I have to like it. Anyway, Steve has many encouraging words for learning from others in the first place, and how he has been fortunate enough to be able to work with people who have helped him build better systems. I always appreciate Steve’s writing, and this post is no exception.

Glenn Berry wrote about a place where I think we’ve all been–it boils down to not RTFM 🙂 But also, it involves building PCs, which maybe most of us used to do, but hardly any do anymore. I still do, but only once every, like, eight years, so… This was funny, because as Glenn walked through how he got here, I saw right where this was going; I mean, with four disks to hook up, I would have gone right for that nice quad of stand-offs, too! I mean, why split the cables up between two places when you could just do one! Yeah. Uh-huh. Yep. Glenn’s main takeaway is, basically read the documentation, which is always a good idea. As someone who really likes writing it (I’m aware of how broken I am), I also know how little this happens in practice.

Todd Kleinhans talks about Letting it Fail. This is true–sometimes things have to break to get the right peoples’ attention, or to show just how bad a situation could get. Todd has a story about just one of these situations. I don’t think any of us like things getting to that point, but sometimes there aren’t other options. Todd also has a good final takeway, about “doing nothing” being a valid option to a situation, and it really is. May not lead to a good outcome, but it is an option!

Tracy Boggiano starts out with a line that is a big “been there, done that” for me: “One fateful night while I was not on call, I got a call around 3:30 AM.” Ahh yes. You’re not on-call, but you wind up on the horn with Ops, anyway. Tracy’s story has some good head-shaking items in it, which is about how I expect a story that starts like this to end. Tracy has a good line towards the end: “Everything from the network, to the server hardware, to the database creates the system and working as a team is the only way to make sure things are configured to perform and not fail.” Ain’t that the truth…

And finally, my man Andy Yun talks about Presentation Disasters. Andy comes through here with probably the most aviation-related lesson of all: Paranoia Pays Off. Yessssss! Always have a Plan B–plus C and D if possible–so when things go pear-shaped, you already have a plan. Andy was presenting at GroupBy back in May, when his headset died. But he was ready! Good lesson for all and everything here, not just those of us slinging TSQL or flying airplanes.

And that’s it! This was the first time I’ve hosted T-SQL Tuesday, and I want to thank everyone who shared their stories with us this week!

SQL Saturday in Cleveland, Ohio is next week, on February 3rd. If you’re in the area or can easily make it there, I hope that you can come out for a great day of free SQL Server training. I enjoy presenting at SQL Saturdays; they’re fun and educational days for speakers and attendees, alike. Last time we were in Cleveland it had snowed overnight when it was time to leave town on Sunday morning. I’ve lived even longer in the south now, so if that happens again, it’ll be even more fun this time.

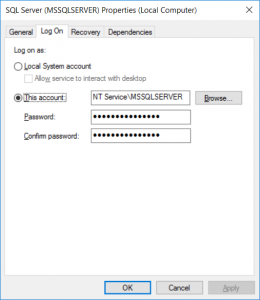

SQL Saturday in Cleveland, Ohio is next week, on February 3rd. If you’re in the area or can easily make it there, I hope that you can come out for a great day of free SQL Server training. I enjoy presenting at SQL Saturdays; they’re fun and educational days for speakers and attendees, alike. Last time we were in Cleveland it had snowed overnight when it was time to leave town on Sunday morning. I’ve lived even longer in the south now, so if that happens again, it’ll be even more fun this time. We all know the best practices for SQL Server service accounts–domain account (if you’re using Active Directory), non local admin, different one for each service (and server/instance), etc, etc. These are, of course, good best practices and they should be followed as closely as possible in Production and on servers/instances

We all know the best practices for SQL Server service accounts–domain account (if you’re using Active Directory), non local admin, different one for each service (and server/instance), etc, etc. These are, of course, good best practices and they should be followed as closely as possible in Production and on servers/instances